Ethereum: Python ThreadPoolExecutor Closes Connections Before Tasks Finish

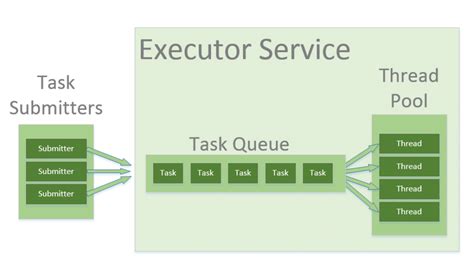

When working with Python's `ThreadPoolExecutor` class, it is important to manage connections carefully so that they are not closed before the tasks using them have finished. This article looks at one such problem, which arises when fetching historical data from the Binance API and storing it in a PostgreSQL database using `ThreadPoolExecutor`.

Background: Why Connections Close

`ThreadPoolExecutor` runs each submitted task on a worker thread from its pool. A connection opened by a task can end up closed prematurely if it is not handled correctly. Here is what can happen:

- Each task (for example, fetching historical price data) can open its own connection to the Binance API.

- The thread responsible for closing a connection may exit as soon as its own task is done, before all the other tasks have completed.
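The two points above can be sketched without any network access. This is a minimal illustration, not the article's actual code: `FakeConnection` and `task` are hypothetical stand-ins for a real API or database connection and for the work done per task. It shows each task opening and closing its own connection on whichever worker thread runs it.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class FakeConnection:
    """Hypothetical stand-in for an API or database connection."""

    def __init__(self, owner):
        self.owner = owner
        self.closed = False

    def close(self):
        self.closed = True


def task(index):
    # Each task opens its own connection on the worker thread that runs it.
    conn = FakeConnection(owner=threading.current_thread().name)
    try:
        return index, conn.owner
    finally:
        # Closing here affects only this task's connection.
        conn.close()


with ThreadPoolExecutor(max_workers=2) as executor:
    results = list(executor.map(task, range(4)))

for index, owner in results:
    print(f"task {index} ran on {owner}")
```

Because no connection object is shared between tasks, one task closing its connection cannot cut off another task that is still running.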

Problem: Connections Closed Before Tasks Complete

In your case, you want to fetch historical cryptocurrency data and store it in a PostgreSQL database from several threads, as follows:

```python
import config
from threading import Thread

from binance.client import Client
import psycopg2


def fetch_data():
    # Query the Binance API
    client = Client(config.binance_api_key, config.binance_api_secret)
    # get_order_book needs a trading pair; config.symbol is an assumed setting
    response = client.get_order_book(symbol=config.symbol)

    # Store the data in the PostgreSQL database
    conn = psycopg2.connect(
        host=config.db_host,
        user=config.db_user,
        password=config.db_password,
        database=config.db_name
    )
    cur = conn.cursor()
    # Each order book entry is a [price, quantity] pair
    for price, _quantity in response['bids']:
        cur.execute(
            "INSERT INTO historical_data (symbol, price) VALUES (%s, %s)",
            (config.symbol, price)
        )
    conn.commit()
    conn.close()


def main():
    threads = []
    for _ in range(config.num_threads):
        thread = Thread(target=fetch_data)
        threads.append(thread)
        thread.start()


if __name__ == '__main__':
    main()
```

In this example, the `fetch_data` function opens a connection to the PostgreSQL database and writes to it. The program, however, starts several threads to fetch data from the Binance API. Each thread closes its connection as soon as its own task is done, and the main thread moves on without waiting for the other tasks to finish.
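The effect of not waiting can be seen in a small sketch that uses only the standard library. `slow_task` is a hypothetical stand-in for the API call plus the database insert, and the sleep duration is arbitrary. The count taken right after starting the threads is usually zero, while the count taken after `join()` includes every task.

```python
import threading
import time

finished = []


def slow_task(index):
    time.sleep(0.1)  # stand-in for the API call and the database insert
    finished.append(index)


threads = [threading.Thread(target=slow_task, args=(i,)) for i in range(3)]
for t in threads:
    t.start()

# The main thread reaches this line without waiting for the workers.
done_before_join = len(finished)

# join() blocks until each worker has finished its task.
for t in threads:
    t.join()

done_after_join = len(finished)
print(f"before join: {done_before_join}, after join: {done_after_join}")
```

Any cleanup that depends on all tasks being complete belongs after the `join()` calls, not directly after `start()`.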

Solution: Using concurrent.futures

To solve this problem, you can use the `concurrent.futures` module, which provides a high-level interface for asynchronously executing callables. Here is the corrected code fragment:

```python
import config
from concurrent.futures import ThreadPoolExecutor

from binance.client import Client
import psycopg2


def fetch_data(_index):
    # Query the Binance API
    client = Client(config.binance_api_key, config.binance_api_secret)
    # get_order_book needs a trading pair; config.symbol is an assumed setting
    response = client.get_order_book(symbol=config.symbol)

    # Store the data in the PostgreSQL database
    conn = psycopg2.connect(
        host=config.db_host,
        user=config.db_user,
        password=config.db_password,
        database=config.db_name
    )
    cur = conn.cursor()
    # Each order book entry is a [price, quantity] pair
    for price, _quantity in response['bids']:
        try:
            cur.execute(
                "INSERT INTO historical_data (symbol, price) VALUES (%s, %s)",
                (config.symbol, price)
            )
        except psycopg2.Error as e:
            print(f"Insert failed: {e}")
            # Reset the aborted transaction so later inserts can run
            # (this also discards rows not yet committed)
            conn.rollback()
    conn.commit()
    conn.close()


def main():
    with ThreadPoolExecutor(max_workers=config.num_threads) as executor:
        # Consume the results so exceptions raised in workers surface here
        list(executor.map(fetch_data, range(config.num_threads)))


if __name__ == '__main__':
    main()
```

In this corrected code fragment, we use `ThreadPoolExecutor` to manage the threads and run the tasks asynchronously. The `fetch_data` function is called once for each index in the range from 0 to `config.num_threads - 1`. Leaving the `with` block calls `executor.shutdown(wait=True)`, so `main()` returns only after every task has finished. This ensures that each thread completes its task independently and is not cut off when other threads finish first.
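The waiting behaviour of the `with` block, and the way failures are reported, can be checked with a short standard-library sketch. The `store` function and its deliberate failure for index 2 are assumptions made for illustration only. It uses `submit` rather than `map` so that each task's outcome can be inspected separately.

```python
import time
from concurrent.futures import ThreadPoolExecutor

completed = []


def store(index):
    time.sleep(0.05)  # stand-in for the API call and the database insert
    if index == 2:
        raise ValueError(f"task {index} failed")
    completed.append(index)
    return index


with ThreadPoolExecutor(max_workers=3) as executor:
    futures = [executor.submit(store, i) for i in range(4)]

# Leaving the with block waits for all tasks, so every future is done here.
all_done = all(f.done() for f in futures)

# An exception raised inside a task is stored on its future, not printed.
errors = [f.exception() for f in futures if f.exception() is not None]

print(f"all done: {all_done}, completed: {sorted(completed)}, errors: {errors}")
```

An exception in one task does not stop the others. It stays on the future until `result()` or `exception()` is called, which is why results should be checked rather than ignored.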

Conclusion

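Using `concurrent.futures` with `ThreadPoolExecutor` lets every task finish its work and close its own connection before the program moves on.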
